[Items] - Passing Data in Scrapy

Summary: how data is passed around in Scrapy, with a focus on how the serializer argument in scrapy.Field(serializer=serialize_text) is used.


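This section takes a brief look at Items in Scrapy. An Item behaves much like a dictionary: it defines the data fields a spider collects, receives the scraped values, and passes them on for output.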
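One practical difference from a plain dict is that a Scrapy Item only accepts the fields it declares; assigning to an undeclared field raises a KeyError. The toy class below imitates that behaviour with the standard library only. It is a rough sketch, not Scrapy's implementation, and the names DeclaredFieldsItem and QuoteRecord are made up for this illustration.

```python
class DeclaredFieldsItem(dict):
    """Toy dict that only accepts keys listed in `fields` (hypothetical, not Scrapy code)."""

    fields = ()  # subclasses list their allowed keys here

    def __setitem__(self, key, value):
        # Only item[key] = value is guarded in this sketch
        if key not in self.fields:
            raise KeyError(f"{type(self).__name__} does not support field: {key}")
        super().__setitem__(key, value)


class QuoteRecord(DeclaredFieldsItem):
    fields = ("text", "author")


record = QuoteRecord()
record["text"] = "An example quote."
record["author"] = "Somebody"
print(dict(record))

try:
    record["tags"] = ["life"]  # not declared, so it is rejected
except KeyError as exc:
    print("rejected:", exc)
```

Declaring the fields up front means a typo in a field name fails loudly instead of silently creating a new key.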

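Taking the data as the starting point, the usual steps are:

- Work out which pieces of data are needed

- Define an Item, the container that stores one set of data

- Extract structured data from the web page

- Pass the data to the Item

- Output the Item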
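The five steps can be sketched without Scrapy, using only the standard library. Everything here is an assumption made for illustration: the HTML snippet is hard-coded rather than downloaded, a plain dict stands in for the Item, and a regular expression stands in for Scrapy's selectors.

```python
import json
import re

# Step 1: the data we want from each quote block is its text and its author.
FIELDS = ("text", "author")

# A hard-coded stand-in for a downloaded page (made up for this sketch).
SAMPLE_HTML = """
<div class="quote"><span class="text">First sample quote.</span>
<small class="author">Author One</small></div>
<div class="quote"><span class="text">Second sample quote.</span>
<small class="author">Author Two</small></div>
"""

QUOTE_PATTERN = re.compile(
    r'<span class="text">(?P<text>.*?)</span>\s*'
    r'<small class="author">(?P<author>.*?)</small>',
    re.DOTALL,
)


def extract_items(html):
    """Steps 3 and 4: pull values out of the page and fill one container per quote."""
    for match in QUOTE_PATTERN.finditer(html):
        # Step 2: a dict with a fixed set of keys plays the role of the Item.
        yield {name: match.group(name) for name in FIELDS}


def to_json_lines(items):
    """Step 5: output each item as one JSON line."""
    return [json.dumps(item, ensure_ascii=False) for item in items]


lines = to_json_lines(extract_items(SAMPLE_HTML))
for line in lines:
    print(line)
```

In a real spider, Scrapy takes over the download, the selectors, and the export; the shape of the workflow stays the same.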

Let's work through an example:

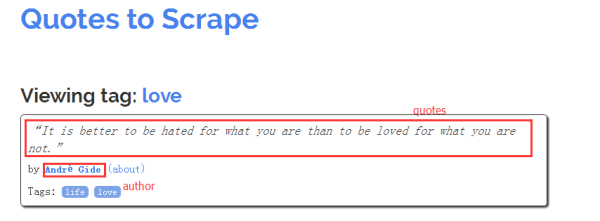

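We are again scraping quotes and authors, so all we need to do is define an Item like the QuotesItem class below, then instantiate QuotesItem in the spider, fill it with the scraped data, and yield it.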

    # -*- coding: utf-8 -*-
    import scrapy


    class QuotesItem(scrapy.Item):
        # Container for one quote: the fields the spider may fill
        text = scrapy.Field()
        author = scrapy.Field()


    class QuotesSpider(scrapy.Spider):
        name = "quotes"
        allowed_domains = ['toscrape.com']
        custom_settings = {
            'FEED_EXPORT_ENCODING': 'utf-8',
            # FEED_URI is the older setting; Scrapy 2.1+ prefers FEEDS
            'FEED_URI': 'quotes.jsonlines',
        }

        def __init__(self, category=None, *args, **kwargs):
            super(QuotesSpider, self).__init__(*args, **kwargs)
            # category comes from the command line: -a category=<tag>
            self.start_urls = ['http://quotes.toscrape.com/tag/%s/' % category, ]

        def parse(self, response):
            quote_block = response.css('div.quote')
            for quote in quote_block:
                text = quote.css('span.text::text').extract_first()
                author = quote.xpath('span/small/text()').extract_first()
                # Fill an Item instead of a plain dict
                item = QuotesItem()
                item['text'] = text
                item['author'] = author
                yield item

            # Follow the "next" link until there are no more pages
            next_page = response.css('li.next a::attr("href")').extract_first()
            if next_page is not None:
                yield response.follow(next_page, self.parse)

Look at this part of the parse() method:

    item = QuotesItem()
    item['text'] = text
    item['author'] = author

Yes, it is that simple. The effect is the same as the dict we wrote before:

    item = dict(text=text, author=author)

What else can a Field in an Item do? It also accepts an optional argument for formatting (serializing) the value. Here is an example:

Suppose I only want to output five arbitrary words from text. How can that be done?
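Before turning to Scrapy's own syntax, the idea can be sketched in plain Python: attach a function to a field's metadata and call it only when the item is written out. This is a minimal sketch under stated assumptions, not Scrapy's code. The names pick_five_words, FIELD_META, and export_item are hypothetical, and the sample sentence is made up.

```python
import random


def pick_five_words(text):
    """Return up to five randomly chosen words from `text`, without the curly quotes."""
    words = text.replace("\u201c", "").replace("\u201d", "").split()
    # min() avoids the ValueError that sample() raises for short inputs
    return random.sample(words, min(5, len(words)))


# Hypothetical field metadata: field name -> options, similar in spirit to the
# keyword arguments given when a field is declared.
FIELD_META = {
    "text": {"serializer": pick_five_words},
    "author": {},
}


def export_item(item, field_meta):
    """Apply each field's serializer, if it has one, at output time only."""
    exported = {}
    for name, value in item.items():
        serializer = field_meta.get(name, {}).get("serializer", lambda v: v)
        exported[name] = serializer(value)
    return exported


item = {
    "text": "\u201cA sample sentence with more than five words in it.\u201d",
    "author": "Somebody",
}
exported = export_item(item, FIELD_META)
print(exported)
# The stored item is unchanged; only the exported copy was transformed.
print(item["text"])
```

Keeping the transformation in the export step means the spider still holds the full quote, and only the written output is shortened.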

Start from the Item definition:

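    text = scrapy.Field(serializer=serialize_text)

Here serializer is the optional argument, and serialize_text is the function that processes the value stored in the item's text field. (In practice, serializer only takes effect when Item Exporters are used. In the spider project covered later in "Item Pipeline - spider projects and data pipelines", attaching processing functions to an item also requires the Item Loaders described in the Item Loaders section.) Let's define the serialize_text() function:

    import random

    def serialize_text(text):
        # Drop the curly quotes, then split the quote into words
        word_list = text.replace(u'“', '').replace(u'”', '').split()
        # Pick up to five words; sample() raises ValueError if asked for more than exist
        return random.sample(word_list, min(5, len(word_list)))

The result: each exported text value is a list of up to five randomly chosen words instead of the full quote.

The complete code: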

    # -*- coding: utf-8 -*-
    # filename: Quotes_Spider.py
    import random

    import scrapy


    def serialize_text(text):
        # Drop the curly quotes, then split the quote into words
        word_list = text.replace(u'“', '').replace(u'”', '').split()
        # Pick up to five words; sample() raises ValueError if asked for more than exist
        return random.sample(word_list, min(5, len(word_list)))


    class QuotesItem(scrapy.Item):
        # serialize_text is applied when an Item Exporter writes the item out
        text = scrapy.Field(serializer=serialize_text)
        author = scrapy.Field()


    class QuotesSpider(scrapy.Spider):
        name = "quotes"
        allowed_domains = ['toscrape.com']
        custom_settings = {
            'FEED_EXPORT_ENCODING': 'utf-8',
            'FEED_URI': 'quotes.jsonlines',
        }

        def __init__(self, category=None, *args, **kwargs):
            super(QuotesSpider, self).__init__(*args, **kwargs)
            self.start_urls = ['http://quotes.toscrape.com/tag/%s/' % category, ]

        def parse(self, response):
            quote_block = response.css('div.quote')
            for quote in quote_block:
                text = quote.css('span.text::text').extract_first()
                author = quote.xpath('span/small/text()').extract_first()
                item = QuotesItem()
                item['text'] = text
                item['author'] = author
                yield item
                # The two lines below yield the same data in a single statement.
                # Note: a plain dict carries no Field metadata, so the serializer
                # is not applied to it.
                # yield {"text": text, "author": author}
                # yield QuotesItem({"text": text, "author": author})

            next_page = response.css('li.next a::attr("href")').extract_first()
            if next_page is not None:
                yield response.follow(next_page, self.parse)

Run the script:

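    from scrapy import cmdline

    # Same as running on the command line:
    # scrapy runspider Quotes_Spider.py -a category=life
    cmdline.execute("scrapy runspider Quotes_Spider.py -a category=life".split())

Excerpted from: Items - Scrapy中数据的传递 - 知乎 (Zhihu). Thanks to the author.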

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.